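I. Overview

1.1 Binder Architecture

The Android kernel is based on Linux, and Linux offers many inter-process communication (IPC) mechanisms: pipes, shared memory, signals, semaphores, message queues, and sockets. So why does Android use Binder for IPC? The reasons below are quoted from gityuan's answer on Zhihu: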

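(1) Performance: number of data copies

Binder needs only one data copy, whereas pipes, message queues, and sockets need two, and shared memory needs none. In terms of performance, Binder is second only to shared memory.

(2) Stability

Binder uses a client/server (C/S) architecture in which the Server and Client sides are relatively independent, which makes it fairly stable. Shared memory is complex to implement and requires handling synchronization and concurrency. In terms of stability, Binder is better than shared memory.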

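(3) Security

Traditional Linux IPC cannot obtain a reliable UID/PID for the peer process, so it cannot verify the peer's identity. Android assigns a UID to every application and exposes only the Client side to the outside. The Client sends a task to the Server, and the Server applies its permission policy to decide whether the caller's UID/PID is allowed access.

(4) Language

Linux is written in C, while Android is built around Java. Binder fits the object-oriented model: it turns IPC into invoking a method through a reference to a Binder object. A Binder object can be referenced across processes; its entity lives in one process, while references to it can exist in any process in the system.

(5) Company strategy

The Linux kernel source is licensed under the GPL. To avoid being bound by the GPL, the application layer cannot call into the underlying kernel directly. Binder is based on the open-source OpenBinder, whose author works at Google, and OpenBinder is covered by the Apache-2.0 license.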

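Binder uses a layered architecture in which each layer has its own responsibility.

The main characteristics of the layered design are:

- The layers are independent of one another;

- The design is flexible: the interfaces between layers are well defined, so a layer is unaffected as long as its interfaces do not change;

- The structure is decoupled, so each layer can use the technology and language that suit it;

- It is easy to maintain: problems can be debugged and located layer by layer.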

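The Binder architecture has four layers: the application layer, the Framework layer, the Native layer, and the kernel layer.

Application layer: a Java application calls IActivityManager.bindService, which passes through several layers of calls and reaches AMS.bindService;

Framework layer: Java Binder IPC uses a C/S architecture; this layer implements BinderProxy and Binder;

Native layer: native IPC, also with a C/S architecture; this layer implements BpBinder and BBinder (JavaBBinder);

Kernel layer: the Binder driver runs in kernel space, which is shared by all processes. The other three layers run in user space, which is not shared.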

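1.2 Binder IPC Principles

Binder communication uses a C/S architecture made up of the Client, the Server, ServiceManager, and the Binder driver. ServiceManager manages the various services in the system. The architecture diagram below uses the AMS service as an example:

Both registering a service and getting a service go through ServiceManager. Here ServiceManager means the Native-layer ServiceManager (C++), not the framework-layer ServiceManager (Java). ServiceManager is the steward of the whole Binder mechanism and the daemon process of Android's Binder IPC. Before they can communicate, the Client and the Server each need to obtain the ServiceManager interface first. Once the target has been looked up, the result can be cached so that ServiceManager does not have to be queried every time.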

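In the diagram, the Client, the Server, and ServiceManager all communicate with each other through Binder. The communication falls into three steps:

1. Register the service: AMS registers itself with ServiceManager. In this step the process hosting AMS (system_server) is the client and ServiceManager is the server.

2. Get the service: before using AMS, the Client process must obtain the AMS proxy from ServiceManager. In this step the process requesting the AMS proxy is the client and ServiceManager is the server.

3. Use the service: with the proxy it obtained, the app process can interact directly with the process hosting AMS. In this step the process holding the proxy is the client and the process hosting AMS (system_server) is the server.

The Client, the Server, and ServiceManager do not talk to each other directly; each interacts with the Binder driver, and that is how IPC is achieved. The Binder driver lives in the kernel, while the Client, the Server, and ServiceManager live in user space. The Binder driver and ServiceManager can be regarded as infrastructure of the Android platform, while the Client and the Server belong to the Android application layer.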
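The three steps (register, get, use) can be pictured with a small in-process analogy. This is only a sketch in plain Java: `ToyService`, `ToyServiceManager`, and `RegistryDemo` are hypothetical names, and nothing here crosses a process boundary or touches the Binder driver.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for a remote service interface; not an Android API. */
interface ToyService {
    String call(String request);
}

/** Toy registry playing the role of ServiceManager: name -> service handle. */
class ToyServiceManager {
    private final Map<String, ToyService> services = new HashMap<>();

    // Step 1: the server side registers its service under a name.
    void addService(String name, ToyService service) {
        services.put(name, service);
    }

    // Step 2: the client side looks the service up by name.
    ToyService getService(String name) {
        return services.get(name);
    }
}

class RegistryDemo {
    public static void main(String[] args) {
        ToyServiceManager sm = new ToyServiceManager();
        sm.addService("activity", request -> "AMS handled " + request);

        // The client may cache this handle instead of asking the registry again.
        ToyService ams = sm.getService("activity");

        // Step 3: use the service directly through the handle.
        System.out.println(ams.call("bindService"));
    }
}
```

In the real system the handle returned in step 2 is a proxy, and every call in step 3 travels through the Binder driver rather than being a direct method call.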

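Earlier articles analyzed the first two steps, registering and getting a service. This article covers the third step, using a service, with the bindService flow as the example.

1.3 The bindService Flow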

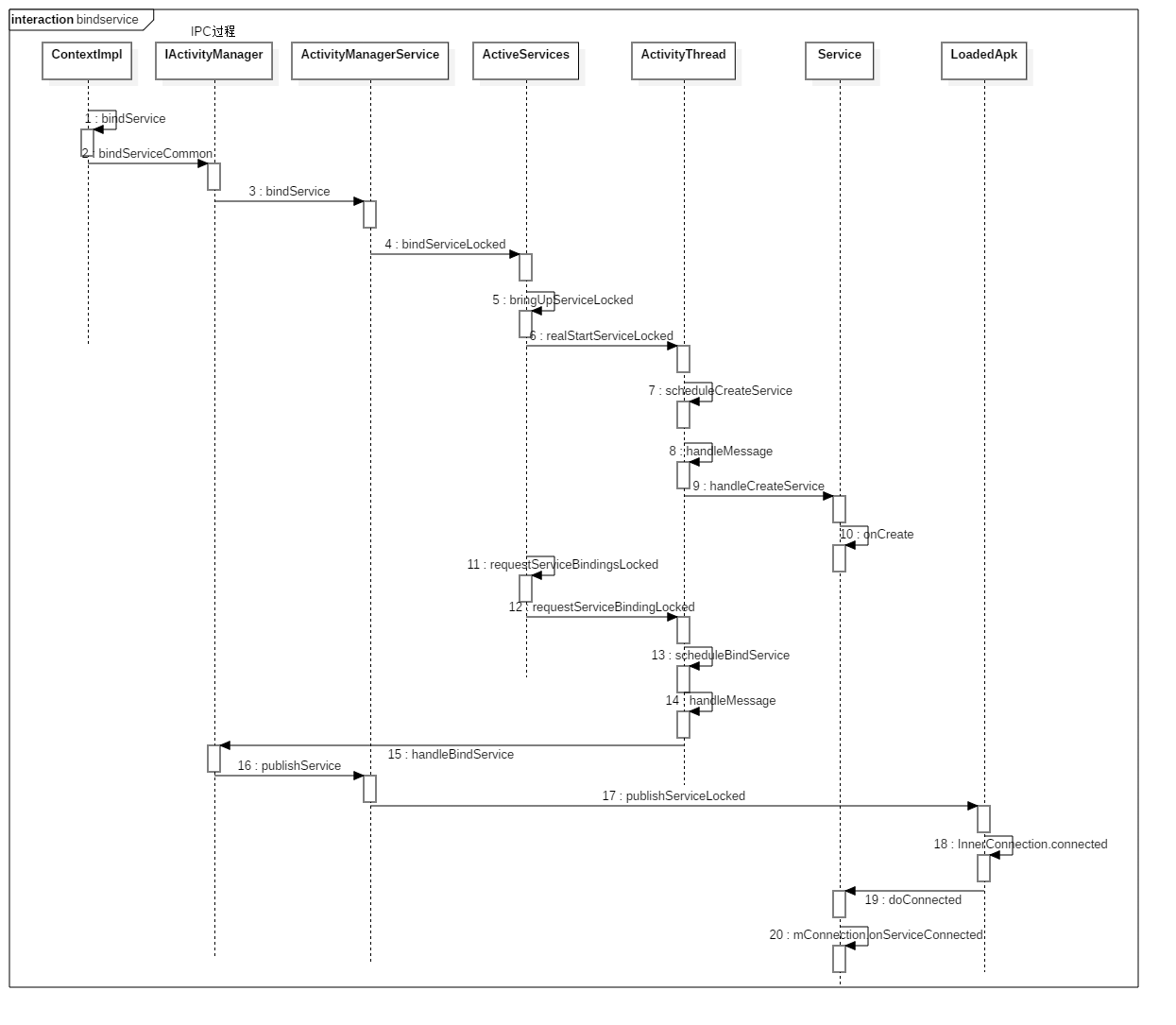

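The bindService flow is shown in the figure above: the client calls bindService, and the server eventually returns the proxy to the client through the ServiceConnection object. The sections below analyze this process from the source code.

II. Client Process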

2.1 CL.bindService

[->ContextImpl.java]

1 | @Override |

2.2 CL.bindServiceCommon

[->ContextImpl.java]

1 | private boolean bindServiceCommon(Intent service, ServiceConnection conn, int flags, Handler |

Its main work is:

- Create a LoadedApk.ServiceDispatcher object

- Send a bindService request to AMS

2.2.1 getServiceDispatcher

[->LoadedApk.java]

1 | public final IServiceConnection getServiceDispatcher(ServiceConnection c, |

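- mServices records, for each context, every ServiceConnection and its corresponding LoadedApk.ServiceDispatcher object; a dispatcher is created only once for the same ServiceConnection;

- The returned object is a ServiceDispatcher.InnerConnection, which extends IServiceConnection.Stub.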
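The caching behavior described above can be sketched as a two-level map. This is an illustration only, not the LoadedApk source: `ToyContext`, `ToyConnection`, `ToyDispatcher`, and `DispatcherCache` are hypothetical names, and plain `HashMap` is used here as an assumption about the container.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-ins; the real classes live in android.app.LoadedApk. */
class ToyContext {}

class ToyConnection {}

class ToyDispatcher {
    final ToyConnection connection;

    ToyDispatcher(ToyConnection connection) {
        this.connection = connection;
    }
}

class DispatcherCache {
    // context -> (connection -> dispatcher), mirroring the role of mServices.
    private final Map<ToyContext, Map<ToyConnection, ToyDispatcher>> services = new HashMap<>();

    synchronized ToyDispatcher getServiceDispatcher(ToyContext context, ToyConnection c) {
        Map<ToyConnection, ToyDispatcher> map =
                services.computeIfAbsent(context, k -> new HashMap<>());
        // A dispatcher is created only the first time this connection is seen.
        return map.computeIfAbsent(c, ToyDispatcher::new);
    }
}
```

Calling `getServiceDispatcher` twice with the same context and connection returns the same dispatcher instance, while a different context or connection yields a new one.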

2.2.2 ServiceDispatcher

[->LoadedApk.java]

1 | static final class ServiceDispatcher { |

getServiceDispatcher returns the InnerConnection object created in the constructor.

2.2.3 AM.getService

[->ActivityManager.java]

1 | public static IActivityManager getService() { |

As the earlier article on getting a service shows, ServiceManager.getService(Context.ACTIVITY_SERVICE) is equivalent to new BinderProxy(nativeData), so b here is effectively a BinderProxy object.

2.2.4 asInterface

[->IActivityManager.java]

1 | public static android.app.IActivityManager asInterface(android.os.IBinder obj) { |

2.2.5 Creating the Proxy

[->IActivityManager.java]

1 | private static class Proxy implements android.app.IActivityManager { |

Here mRemote is the BinderProxy object, and data is sent to the server through mRemote.
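The asInterface and Proxy steps follow the usual Stub/Proxy pattern, which can be sketched in plain Java. Everything below is a simplified model under stated assumptions: `ToyBinder`, `ToyActivityManager`, `ToyStub`, and `ToyProxy` are hypothetical names, a `List<String>` stands in for Parcel, and the transaction code value is made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical miniature of IBinder: carries a call code plus flattened arguments. */
interface ToyBinder {
    List<String> transact(int code, List<String> data);

    Object queryLocalInterface();   // non-null only when caller and service share a process
}

interface ToyActivityManager {
    int TRANSACTION_BIND_SERVICE = 1;   // assumed value, for illustration only

    int bindService(String intent);
}

/** Server side: unpacks the request and calls the real implementation. */
abstract class ToyStub implements ToyBinder, ToyActivityManager {
    public Object queryLocalInterface() {
        return this;
    }

    public List<String> transact(int code, List<String> data) {
        List<String> reply = new ArrayList<>();
        if (code == TRANSACTION_BIND_SERVICE) {
            reply.add(Integer.toString(bindService(data.get(0))));
        }
        return reply;
    }

    static ToyActivityManager asInterface(ToyBinder obj) {
        if (obj == null) {
            return null;
        }
        Object local = obj.queryLocalInterface();
        if (local instanceof ToyActivityManager) {
            return (ToyActivityManager) local;   // same process: no proxy needed
        }
        return new ToyProxy(obj);                // remote handle: wrap it in a proxy
    }
}

/** Client side: packs the arguments and forwards them through mRemote. */
class ToyProxy implements ToyActivityManager {
    private final ToyBinder mRemote;

    ToyProxy(ToyBinder remote) {
        mRemote = remote;
    }

    public int bindService(String intent) {
        List<String> data = new ArrayList<>();
        data.add(intent);
        List<String> reply = mRemote.transact(TRANSACTION_BIND_SERVICE, data);
        return Integer.parseInt(reply.get(0));
    }
}
```

The caller only ever sees `ToyActivityManager`; whether the call is local or forwarded through `mRemote` is decided once, inside `asInterface`.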

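The writeStrongBinder and transact operations are covered in detail in the article on registering a service and are not analyzed again here. Their result is that a BINDER_WORK_TRANSACTION command is written to the target process. Next we move to the server side, the system_server process.

III. The system_server Process

As described in section 15.2 of the article on process startup, system_server starts its binder threads when the process starts.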

3.1 onZygoteInit()

[->app_main.cpp]

1 | virtual void onZygoteInit() |

3.1.1 startThreadPool

[->ProcessState.cpp]

1 | void ProcessState::startThreadPool() |

3.1.2 spawnPooledThread

[->ProcessState.cpp]

1 | void ProcessState::spawnPooledThread(bool isMain) |

3.1.3 new PoolThread

[->ProcessState.cpp]

1 | class PoolThread : public Thread |

3.2 joinThreadPool

[->IPCThreadState.cpp]

1 | void IPCThreadState::joinThreadPool(bool isMain) |

The system_server process uses this while loop to fetch and execute binder commands.

3.3 IPC.getAndExecuteCommand

[->IPCThreadState.cpp]

1 | status_t IPCThreadState::getAndExecuteCommand() |

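Here an idle binder thread of system_server is parked in binder_thread_read, waiting for process or thread work. When the BINDER_WORK_TRANSACTION command sent earlier arrives, binder_thread_read produces cmd=BR_TRANSACTION and writes the cmd and its data back to user space.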

3.4 IPC.executeCommand

[->IPCThreadState.cpp]

1 | status_t IPCThreadState::executeCommand(int32_t cmd) |

For a oneway call, everything is finished once this transact has executed.

For a non-oneway call, which requires a reply, a BC_REPLY command is sent to the Binder driver.
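The oneway versus non-oneway branch can be sketched as follows. This is a simplified model rather than the IPCThreadState code: `ToyTransaction` and `ToyCommandExecutor` are hypothetical names, strings stand in for Parcel data, and a list records what would be written to the driver.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

/** Hypothetical, simplified view of an incoming BR_TRANSACTION. */
class ToyTransaction {
    static final int FLAG_ONEWAY = 0x01;   // same bit value as IBinder.FLAG_ONEWAY
    final String data;
    final int flags;

    ToyTransaction(String data, int flags) {
        this.data = data;
        this.flags = flags;
    }
}

class ToyCommandExecutor {
    /** Records the commands that would be written back to the driver. */
    final List<String> sentToDriver = new ArrayList<>();

    void executeTransaction(ToyTransaction tr, UnaryOperator<String> target) {
        // The target plays the role of the service's transact implementation.
        String reply = target.apply(tr.data);
        if ((tr.flags & ToyTransaction.FLAG_ONEWAY) == 0) {
            // Non-oneway: the caller is blocked waiting, so a reply must be sent.
            sentToDriver.add("BC_REPLY:" + reply);
        }
        // Oneway: nothing is sent back; the work ends here.
    }
}
```

Running one normal and one oneway transaction through `executeTransaction` leaves exactly one `BC_REPLY` entry in `sentToDriver`.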

3.4.1 ipcSetDataReference

[->Parcel.cpp]

1 | void Parcel::ipcSetDataReference(const uint8_t* data, size_t dataSize, |

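Parcel member variables:

mData: start address of the parcel data

mDataSize: size of the parcel data

mObjects: offsets of the flat_binder_object entries

mObjectsSize: number of flat_binder_object entries in the parcel

mOwner: the release function, freeBuffer

mOwnerCookie: information needed by the release function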

3.4.2 freeDataNoInit

[->Parcel.cpp]

1 | void Parcel::freeDataNoInit() |

3.4.3 releaseObjects

[->Parcel.cpp]

1 | void Parcel::releaseObjects() |

3.4.4 release_object

[->Parcel.cpp]

1 | static void release_object(const sp<ProcessState>& proc, |

The corresponding strong or weak reference count is decremented according to the type of the flat_binder_object.

3.4.5 ~Parcel

[->Parcel.cpp]

1 | Parcel::~Parcel() |

After executeCommand finishes, the local Parcel variable buffer is released, which runs the Parcel destructor. The freeBuffer method is then executed.

3.4.6 freeBuffer

[->IPCThreadState.cpp]

1 | void IPCThreadState::freeBuffer(Parcel* parcel, const uint8_t* data, |

This writes a BC_FREE_BUFFER command to the binder driver.

3.5 BBinder.transact

[->binder/Binder.cpp]

1 | status_t BBinder::transact( |

3.5.1 onTransact

[->android_util_Binder.cpp]

1 | status_t onTransact( |

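mObject originates in the addService registration flow: writeStrongBinder is called there, the Binder is passed as an argument to the JavaBBinder constructor, and it is finally assigned to mObject.

gBinderOffsets is initialized in the int_register_android_os_Binder function.

In this way the call goes from C++ back into Java code through JNI and enters the execTransact method, which IActivityManager.Stub inherits from Binder.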

[->android_util_Binder.cpp]

1 | static int int_register_android_os_Binder(JNIEnv* env) |

3.5.2 execTransact

[->Binder.java]

1 | // Entry point from android_util_Binder.cpp's onTransact |

3.6 IActivityManager.onTransact

[->IActivityManager.java]

1 | public static abstract class Stub extends android.os.Binder implements android.app.IActivityManager { |

3.7 onTransact$bindService$

[->IActivityManager.java]

1 | private boolean onTransact$bindService$(android.os.Parcel data, android.os.Parcel reply) throws android.os.RemoteException { |

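For a non-oneway call, IPC::waitForResponse on the caller's side is still waiting for system_server to respond. It leaves waitForResponse only when it receives BR_REPLY, or BR_DEAD_REPLY or another error.

Once bindService completes, the initiating process still has to be told. While handling the BR_TRANSACTION command in IPC.executeCommand (section 3.4), sendReply(reply, 0); is also called to send a BC_REPLY message to the Binder driver. The reply flow is not described in detail here; it mirrors the flow on the way in.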

3.8 AMS.bindService

[->ActivityManagerService.java]

1 | public int bindService(IApplicationThread caller, IBinder token, Intent service, |

3.9 AS.bindServiceLocked

[->ActiveServices.java]

1 | int bindServiceLocked(IApplicationThread caller, IBinder token, Intent service, |

3.10 AS.bringUpServiceLocked

[->ActiveServices.java]

1 | private String bringUpServiceLocked(ServiceRecord r, int intentFlags, boolean execInFg, |

3.11 AS.realStartServiceLocked

[->ActiveServices.java]

1 | private final void realStartServiceLocked(ServiceRecord r, |

IV. Server Process

4.1 AT.scheduleCreateService

[->ActivityThread.java]

1 | public final void scheduleCreateService(IBinder token, |

The Handler mechanism is used to send a message to the handler on the main thread of the server process.
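The hand-off from a binder thread to the main thread can be modeled without Android classes. In this sketch a single-threaded executor stands in for the main thread's Looper and Handler; `ToyMainThread` and the thread name `toy-main` are hypothetical, and the real ActivityThread uses messages rather than futures.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ToyMainThread {
    // One worker thread plays the role of the main thread and its message queue.
    private final ExecutorService mainLooper = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "toy-main");
        t.setDaemon(true);   // do not keep the JVM alive for this demo thread
        return t;
    });

    /** Called on a "binder thread": it only enqueues the work and returns. */
    Future<String> scheduleCreateService(String serviceName) {
        return mainLooper.submit(() -> handleCreateService(serviceName));
    }

    /** Runs later on the "main thread". */
    private String handleCreateService(String serviceName) {
        return serviceName + " created on " + Thread.currentThread().getName();
    }
}
```

The point of the design is that the binder thread returns quickly, while lifecycle work is serialized on a single thread.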

4.2 AT.handleMessage

[->ActivityThread.java]

1 | public void handleMessage(Message msg) { |

4.3 AT.handleCreateService

[->ActivityThread.java]

1 | private void handleCreateService(CreateServiceData data) { |

After realStartServiceLocked (section 3.11) has issued scheduleCreateService, execution continues in the system_server process with requestServiceBindingsLocked.

V. The system_server Process

5.1 AS.requestServiceBindingsLocked

[->ActiveServices.java]

1 | private final void requestServiceBindingsLocked(ServiceRecord r, boolean execInFg) |

Because the service was started through bindService, the bindings of the ServiceRecord cannot be empty.

5.2 AS.requestServiceBindingLocked

[->ActiveServices.java]

1 | private final boolean requestServiceBindingLocked(ServiceRecord r, IntentBindRecord i, |

scheduleBindService is invoked on AT through a Binder proxy; the proxy is generated from IApplicationThread.aidl, much like the one for AMS.

VI. Server Process

6.1 AT.scheduleBindService

[->ActivityThread.java]

1 | public final void scheduleBindService(IBinder token, Intent intent, |

A message is sent to the main thread of the server process for handling.

6.2 AT.handleMessage

[->ActivityThread.java]

1 | public void handleMessage(Message msg) { |

6.3 AT.handleBindService

[->ActivityThread.java]

1 | private void handleBindService(BindServiceData data) { |

The call goes through Binder IPC into the system_server process, passing the binder back to system_server.

VII. The system_server Process

7.1 AMS.publishService

[->ActivityManagerService.java]

1 | public void publishService(IBinder token, Intent intent, IBinder service) { |

The binder object returned by the server's onBind is passed down through writeStrongBinder, arrives in the system_server process, and is turned into a proxy object by readStrongBinder.

7.2 AS.publishServiceLocked

[->ActiveServices.java]

1 | void publishServiceLocked(ServiceRecord r, Intent intent, IBinder service) { |

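c.conn is the IServiceConnection.Stub.Proxy proxy object for the client process. A Binder IPC call through it reaches the client's IServiceConnection.Stub object, which is the InnerConnection object in the client process.

VIII. Client Process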

8.1 InnerConnection.connected

[->LoadedApk.java]

1 | private static class InnerConnection extends IServiceConnection.Stub { |

8.2 SD.connected

[->LoadedApk.java]

1 | public void connected(ComponentName name, IBinder service, boolean dead) { |

8.3 new RunConnection

[->LoadedApk.java]

1 | private final class RunConnection implements Runnable { |

8.4 doConnected

[->LoadedApk.java]

1 | public void doConnected(ComponentName name, IBinder service, boolean dead) { |
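How a final callback step like doConnected might track the active binder and notify the caller can be sketched as below. This is an assumption-laden model, not the LoadedApk source: `ToyServiceConnection` and `ToyServiceDispatcher` are hypothetical names, death handling is left out, and a component name is reduced to a string.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical callback interface shaped like android.content.ServiceConnection. */
interface ToyServiceConnection {
    void onServiceConnected(String name, Object service);

    void onServiceDisconnected(String name);
}

class ToyServiceDispatcher {
    private final ToyServiceConnection connection;
    private final Map<String, Object> activeConnections = new HashMap<>();

    ToyServiceDispatcher(ToyServiceConnection connection) {
        this.connection = connection;
    }

    void doConnected(String name, Object service) {
        Object old = activeConnections.get(name);
        if (old != null && old == service) {
            return;   // the same binder was delivered again: nothing to do
        }
        if (service != null) {
            activeConnections.put(name, service);
        } else {
            activeConnections.remove(name);
        }
        if (old != null) {
            connection.onServiceDisconnected(name);   // the previous binder is gone
        }
        if (service != null) {
            connection.onServiceConnected(name, service);
        }
    }
}
```

With this model, delivering the same binder twice triggers only one `onServiceConnected`, and delivering `null` afterwards triggers `onServiceDisconnected`.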

IX. Summary

9.1 Communication Flow

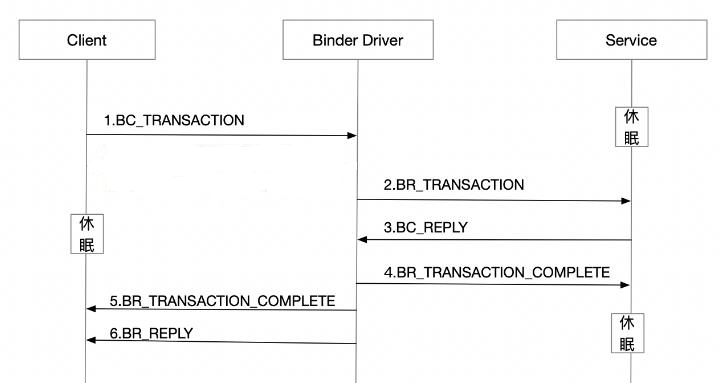

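1. After the initiating thread issues a binder_ioctl request to the Binder driver, waitForResponse enters a while loop and calls talkWithDriver repeatedly. The thread stays blocked until it receives one of the following BR_XXX commands:

- BR_TRANSACTION_COMPLETE: in oneway mode, the loop exits on this command;

- BR_DEAD_REPLY: the target process, thread, or binder entity is null, or a binder thread or binder buffer that was waiting for a reply is being released;

- BR_FAILED_REPLY: many causes, such as an invalid handle, a corrupted transaction stack, a security failure, insufficient memory, insufficient buffer space, a failed data copy, a failed node creation, or various mismatches;

- BR_ACQUIRE_RESULT: a protocol code that is currently unused;

- BR_REPLY: in non-oneway mode, the loop exits only on this command.

2. The five BR_XXX commands above are handled inside waitForResponse without calling executeCommand; BR_TRANSACTION_COMPLETE, for example, exits the loop directly in oneway mode. Any other BR command is passed to executeCommand.

3. Once the target binder thread is created, it enters joinThreadPool and loops on getAndExecuteCommand. When the bwr read and write buffers hold no data, it blocks in the wait_event step of binder_thread_read. Under normal conditions a binder thread never exits once created.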
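The exit conditions of the waiting loop in item 1 can be sketched as a small state machine. This is a simplified model, not the IPCThreadState implementation: `ToyWaitForResponse` is a hypothetical name, command names are plain strings, and an empty queue returns `null` where a real thread would block in the driver.

```java
import java.util.Queue;

class ToyWaitForResponse {
    /**
     * Consumes BR_ commands until one of them ends the wait.
     * Returns the command that ended the loop, or null if the queue ran dry.
     */
    static String waitForResponse(Queue<String> fromDriver, boolean oneway) {
        String cmd;
        while ((cmd = fromDriver.poll()) != null) {
            switch (cmd) {
                case "BR_TRANSACTION_COMPLETE":
                    if (oneway) {
                        return cmd;   // no reply is expected, so the wait is over
                    }
                    break;            // otherwise keep waiting for BR_REPLY
                case "BR_DEAD_REPLY":
                case "BR_FAILED_REPLY":
                case "BR_REPLY":
                    return cmd;
                case "BR_ACQUIRE_RESULT":
                    break;            // listed as unused; ignored in this sketch
                default:
                    // Any other BR_ command would be handed to executeCommand.
                    break;
            }
        }
        return null;
    }
}
```

For a non-oneway call the loop skips past `BR_TRANSACTION_COMPLETE` and returns on `BR_REPLY`; for a oneway call it returns on `BR_TRANSACTION_COMPLETE` and leaves later commands unread.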

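9.2 Communication Protocol

1. Commands sent by Binder clients and servers to the Binder driver start with BC_, and commands sent by the Binder driver to clients or servers start with BR_;

2. binder_transaction is called to handle a transaction only for BC_TRANSACTION or BC_REPLY. In both cases the caller is answered with BINDER_WORK_TRANSACTION_COMPLETE, which binder_thread_read converts into BR_TRANSACTION_COMPLETE;

3. bindService is a non-oneway call; a oneway call has no BC_REPLY.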

9.3 Data Flow

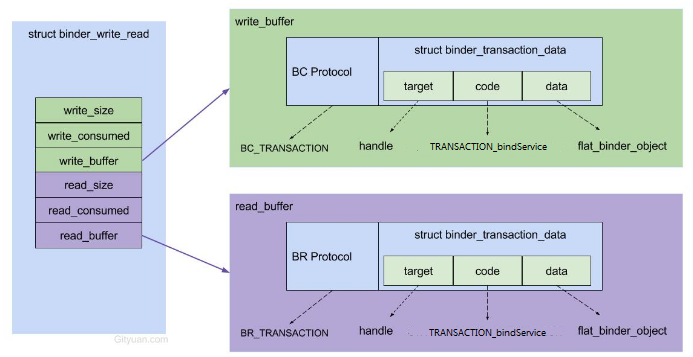

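User space (some of the methods below are introduced in the addService article)

1. bindService: assembles flat_binder_object and other objects into the Parcel data;

2. IPC.writeTransactionData: assembles BC_TRANSACTION and the binder_transaction_data struct and writes them to mOut;

3. IPC.talkWithDriver: assembles BINDER_WRITE_READ and the binder_write_read struct and passes them to the driver via ioctl.

Inside the driver

4. binder_thread_write: processes the binder_write_read.write_buffer data

5. binder_transaction: processes the binder_transaction_data in write_buffer

- creates a binder_transaction struct that records the originating thread, the transaction chain, and related information;

- allocates a binder_buffer struct and copies the data of the current thread's binder_transaction_data into binder_buffer->data;

6. binder_thread_read: processes the binder_transaction struct

- assembles cmd=BR_TRANSACTION and a binder_transaction_data struct and writes them to binder_write_read.read_buffer.

Back in user space

7. IPC.executeCommand: handles the BR_TRANSACTION command and parses binder_transaction_data into the arguments BBinder.transact needs

8. onTransact: reached through a chain of callbacks; it deserializes the data and then calls bindService.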
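The two user-space ends of this data flow, flattening the call on the client and reading it back in onTransact, can be sketched with standard Java streams. This is an analogy only: `ToyParcelFlow` is a hypothetical name, `DataOutputStream` stands in for Parcel, the argument set is reduced to an action string and an int, and the driver hop in the middle is replaced by passing a byte array.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

class ToyParcelFlow {
    static final String DESCRIPTOR = "android.app.IActivityManager";

    /** Client side (steps 1-3): flatten the call into bytes. */
    static byte[] marshal(String intentAction, int flags) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeUTF(DESCRIPTOR);     // interface token, checked by the receiver
            out.writeUTF(intentAction);
            out.writeInt(flags);
        }
        return bytes.toByteArray();
    }

    /** Server side (steps 7-8): read the fields back in the same order. */
    static String unmarshal(byte[] payload) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(payload))) {
            if (!DESCRIPTOR.equals(in.readUTF())) {
                throw new IOException("unexpected interface token");
            }
            String action = in.readUTF();
            int flags = in.readInt();
            return "bindService(" + action + ", " + flags + ")";
        }
    }
}
```

The key property carried over from the real flow is that both sides must agree on field order, since the payload itself has no field names.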

Appendix

Source Paths

1 | frameworks/base/core/java/android/app/ContextImpl.java |